Exploring the METAL framework to test LLMs

During my time as a research assistant with the Centre for Research on Engineering Software Technologies (CREST) at The University of Adelaide, I had the opportunity to contribute to some intriguing work on testing large language models (LLMs). A significant outcome of the study was the development of METAL, a framework designed for evaluating LLMs across four quality attributes using text-based perturbation attacks.

METAL harnesses a technique known as metamorphic testing. It applies transformations to the original input test cases (metamorphic transformations), passes both the original and the transformed inputs to the target, and then compares the two outputs to check whether the expected relationship between them holds (metamorphic relations).

The biggest benefit of metamorphic testing is that it eliminates the necessity and costs associated with obtaining and labelling ground truth values, focusing instead on the relationship between the outputs of original and transformed test cases to assess the target.
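To make the idea concrete, here is a minimal Python sketch of a single metamorphic test. It is not METAL's code: `metamorphic_test`, `toy_sentiment_model` and `outputs_equal` are hypothetical names, and the "model" is a keyword rule standing in for a real LLM call. Note that no ground truth label appears anywhere; only the two outputs are compared.

```python
from typing import Callable


def metamorphic_test(
    model: Callable[[str], str],
    source_input: str,
    transform: Callable[[str], str],
    relation: Callable[[str, str], bool],
) -> bool:
    """Return True if the metamorphic relation holds for this input."""
    follow_up_input = transform(source_input)   # metamorphic transformation
    source_output = model(source_input)
    follow_up_output = model(follow_up_input)
    return relation(source_output, follow_up_output)  # metamorphic relation


# Hypothetical stand-in for an LLM: a keyword-based sentiment "model".
def toy_sentiment_model(text: str) -> str:
    return "positive" if "good" in text.lower() else "negative"


# Relation for robustness: the label should not change.
def outputs_equal(original: str, transformed: str) -> bool:
    return original == transformed


review = "This product is good."
# Upper-casing keeps the keyword intact, so the relation holds.
print(metamorphic_test(toy_sentiment_model, review, str.upper, outputs_equal))
# Dropping a letter from "good" breaks the toy model, so the relation fails.
print(metamorphic_test(toy_sentiment_model, review,
                       lambda t: t.replace("oo", "o"), outputs_equal))
```

A real run would swap the toy model for a function that sends the prompt to the LLM under test.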

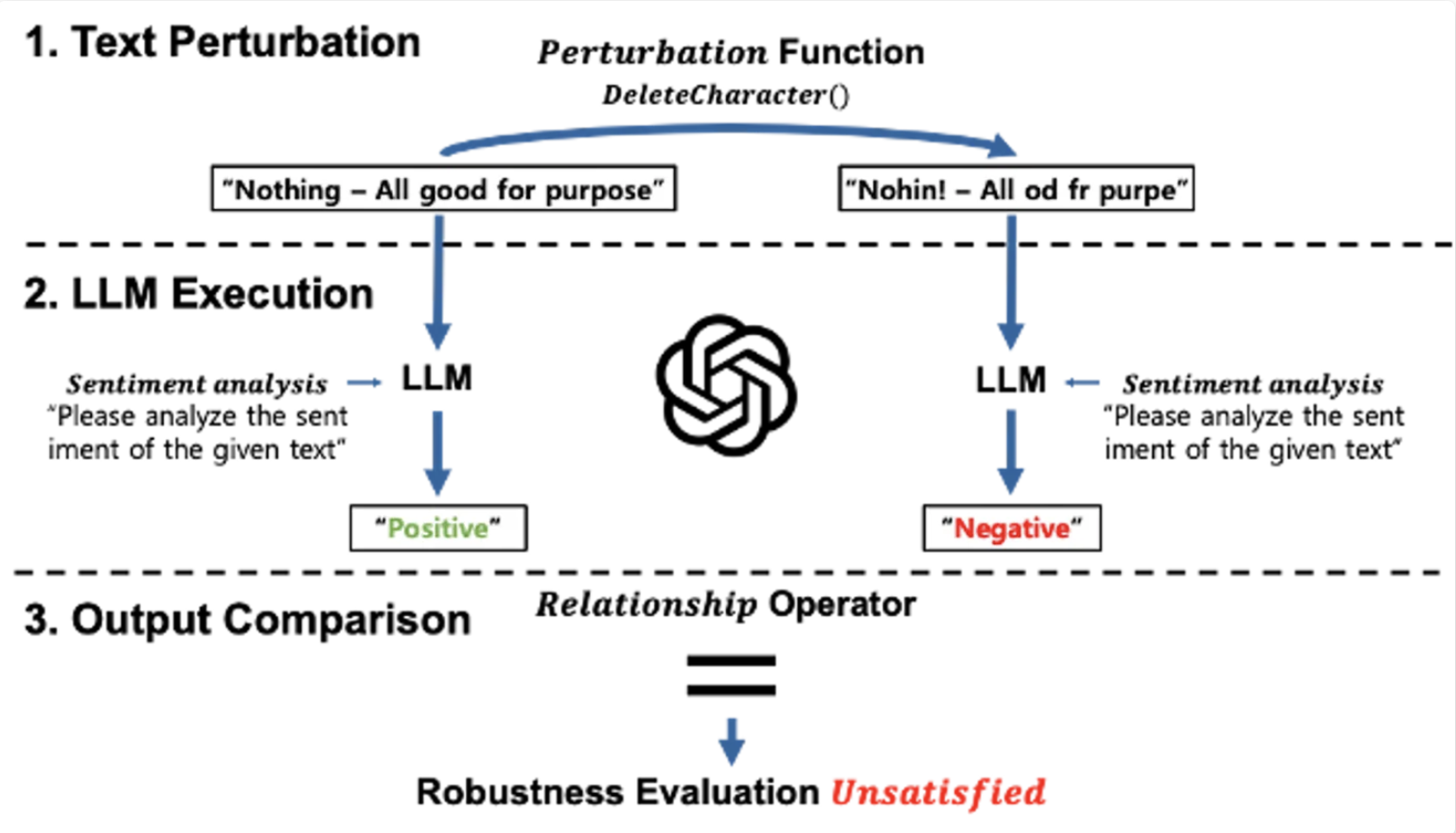

This diagram depicts the metamorphic testing process at a high level. Our original input test case is a review of an Amazon product, which states that the product is good and serves its purpose. We apply a metamorphic transformation, here a text perturbation that deletes characters, and obtain the transformed text on the right-hand side.

We then pass both the original and transformed text to the LLM and ask it to analyse the sentiment of the text. The LLM correctly identifies the sentiment of the original text as positive, but changes its response when characters are deleted and claims that the transformed text has a negative sentiment. Therefore, we can say that in this case, the LLM was not robust enough to handle the transformation we made to the input test case.

Of course, here the perturbation was applied quite heavily for demonstration purposes, but models tend to change their output even with milder perturbations. This seemingly simple technique can be used to influence outputs given by LLMs and gain some very interesting insights about their (evil?) thoughts.
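A character-deletion perturbation like the one in the diagram can be sketched in a few lines of Python. The function name, the `rate` parameter and the seeding are my own assumptions for illustration and are not taken from the framework; varying `rate` is one way to move between mild and heavy perturbation.

```python
import random


def delete_characters(text: str, rate: float, seed: int = 0) -> str:
    """Drop each non-space character independently with probability `rate`."""
    rng = random.Random(seed)  # seeded so the perturbation is reproducible
    return "".join(
        ch for ch in text
        if ch.isspace() or rng.random() >= rate
    )


review = "The product is good and serves its purpose."
for rate in (0.1, 0.5):
    print(rate, delete_characters(review, rate))
```

Keeping whitespace intact preserves word boundaries, which is a design choice of this sketch rather than a requirement of the technique.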

Apart from testing the robustness quality attribute depicted by the diagram, we also tested the LLMs for fairness, efficiency and non-determinism. We chose three LLMs to test: GPT 3.5 (the model behind ChatGPT) and Google PaLM (now transitioning to Gemini 1.0 Pro), both accessed through their APIs, and Llama 2, running on-device using llama.cpp.

We also chose six tasks for the models to perform: sentiment analysis, information retrieval, news classification, question answering, toxicity detection and text summarization. Each task has its own input data, collected from open sources such as Amazon product reviews, ABC News and AskReddit, or from curated datasets on websites like Kaggle where open data was unavailable.

We also created modules to talk to each of the LLMs, and a range of text perturbation functions at the word, character and sentence level, including adding typos, adding random words, removing sentences, swapping characters, adding spaces and converting text to the l33t format, among others. Additionally, we created techniques that let LLMs generate perturbations themselves, which we then use to assess other LLMs, allowing for a form of cross-examination.
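To give a feel for what such perturbation functions look like, here is a hedged Python sketch of three of them. The function names, the l33t substitution table and the `PERTURBATIONS` registry are illustrative assumptions of mine; the framework's own implementations may differ.

```python
import random

# Assumed l33t substitutions; real l33t tables vary.
_LEET = {"a": "4", "e": "3", "i": "1", "o": "0", "s": "5", "t": "7"}
LEET_TABLE = str.maketrans({**_LEET, **{k.upper(): v for k, v in _LEET.items()}})


def to_leet(text: str) -> str:
    """Replace common letters with look-alike digits."""
    return text.translate(LEET_TABLE)


def swap_adjacent_characters(text: str, seed: int = 0) -> str:
    """Swap one randomly chosen pair of neighbouring characters."""
    if len(text) < 2:
        return text
    rng = random.Random(seed)
    i = rng.randrange(len(text) - 1)
    chars = list(text)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


def add_space(text: str, seed: int = 0) -> str:
    """Insert a single space at a random position."""
    rng = random.Random(seed)
    i = rng.randrange(len(text) + 1)
    return text[:i] + " " + text[i:]


# A name-to-function registry makes it easy to apply every perturbation in turn.
PERTURBATIONS = {
    "leet": to_leet,
    "swap_characters": swap_adjacent_characters,
    "add_space": add_space,
}

for name, perturb in PERTURBATIONS.items():
    print(name, "->", perturb("This product is good"))
```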

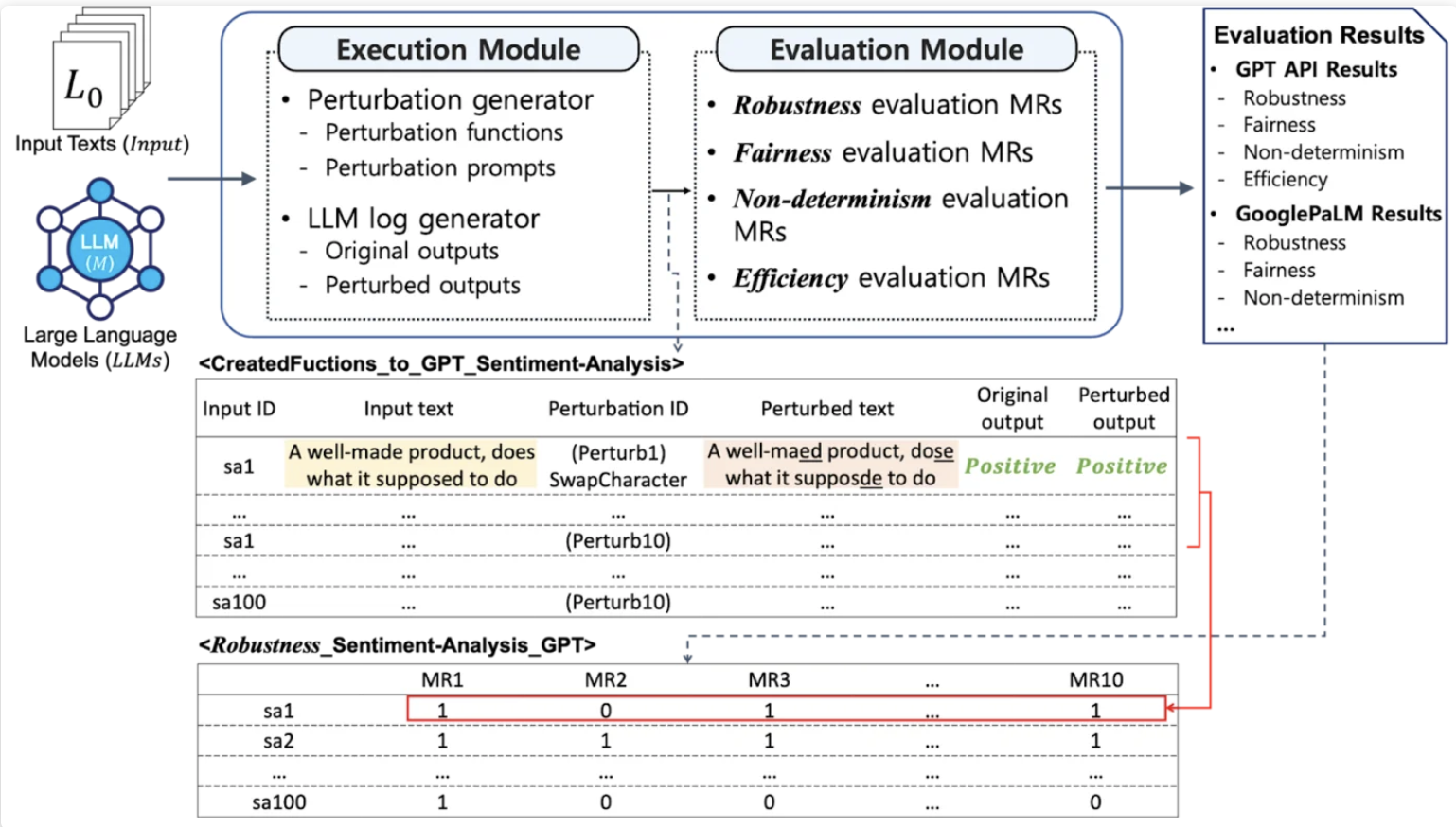

This diagram shows the structure of the whole framework. In the execution module, we pick the input data for the chosen task and select the LLM we want to test. The module parses the input text, applies the perturbations, sends both the original and perturbed inputs to the LLM, and writes their outputs to a CSV file along with their execution times. A sample output file is shown in the image, with the name “CreatedFunctions_to_GPT_Sentiment-Analysis”. This was generated by GPT 3.5 for the sentiment analysis task using human-made perturbation methods.
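The execution step can be sketched roughly as follows. This is an assumption-laden illustration rather than the framework's code: the column names, `run_execution`, `timed_call` and the toy model are all hypothetical, and the output goes to an in-memory buffer instead of a file on disk.

```python
import csv
import io
import time
from typing import Callable, Dict, Iterable, TextIO, Tuple

# Illustrative column names, not METAL's actual CSV schema.
COLUMNS = [
    "id", "perturbation",
    "original_text", "original_output", "original_seconds",
    "perturbed_text", "perturbed_output", "perturbed_seconds",
]


def timed_call(model: Callable[[str], str], text: str) -> Tuple[str, float]:
    """Call the model once and return (output, elapsed seconds)."""
    start = time.perf_counter()
    output = model(text)
    return output, time.perf_counter() - start


def run_execution(
    texts: Iterable[str],
    model: Callable[[str], str],
    perturbations: Dict[str, Callable[[str], str]],
    out: TextIO,
) -> None:
    """Write one CSV row per (input text, perturbation) pair."""
    writer = csv.DictWriter(out, fieldnames=COLUMNS)
    writer.writeheader()
    for text_id, text in enumerate(texts):
        original_output, original_seconds = timed_call(model, text)
        for name, perturb in perturbations.items():
            perturbed_text = perturb(text)
            perturbed_output, perturbed_seconds = timed_call(model, perturbed_text)
            writer.writerow({
                "id": text_id, "perturbation": name,
                "original_text": text, "original_output": original_output,
                "original_seconds": original_seconds,
                "perturbed_text": perturbed_text, "perturbed_output": perturbed_output,
                "perturbed_seconds": perturbed_seconds,
            })


# Toy stand-in for an LLM so the sketch runs without API access.
def toy_model(text: str) -> str:
    return "positive" if "good" in text.lower() else "negative"


buffer = io.StringIO()
run_execution(
    ["The product is good.", "Arrived broken."],
    toy_model,
    {
        "uppercase": str.upper,
        "drop_vowels": lambda t: t.translate(str.maketrans("", "", "aeiouAEIOU")),
    },
    buffer,
)
rows = list(csv.DictReader(io.StringIO(buffer.getvalue())))
print(len(rows), "rows written")
```

Recording the timings alongside the outputs means one execution run can feed both the robustness and the efficiency assessments.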

Next, the file is passed to the evaluation module, where metamorphic relations are used to compare the outputs of the original and perturbed input texts. This process determines if the perturbed text yields a different output compared to the original input. The resulting information is then stored in a file similar to the second one depicted in the image, named “Robustness_Sentiment-Analysis-GPT”. Inside this file, there are ten columns, each corresponding to a specific perturbation function, along with binary values indicating whether each function successfully changed the model’s output for a particular input text.
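A hedged sketch of this evaluation step, using an equality relation on the output labels, might look like the following. The record keys and function names are assumptions, and the sample records are made-up toy data, not results from our experiments.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping


def outputs_differ(original: str, perturbed: str) -> bool:
    """Metamorphic relation check: labels should match, ignoring case and padding."""
    return original.strip().lower() != perturbed.strip().lower()


def evaluate_robustness(records: Iterable[Mapping[str, object]]) -> Dict[object, Dict[str, int]]:
    """Build {input id: {perturbation name: 1 if the output changed else 0}}."""
    table: Dict[object, Dict[str, int]] = defaultdict(dict)
    for record in records:
        changed = outputs_differ(str(record["original_output"]),
                                 str(record["perturbed_output"]))
        table[record["id"]][str(record["perturbation"])] = int(changed)
    return dict(table)


# Made-up records purely for illustration.
sample_records = [
    {"id": 0, "perturbation": "leet", "original_output": "positive", "perturbed_output": "negative"},
    {"id": 0, "perturbation": "add_space", "original_output": "positive", "perturbed_output": "Positive "},
    {"id": 1, "perturbation": "leet", "original_output": "negative", "perturbed_output": "negative"},
    {"id": 1, "perturbation": "add_space", "original_output": "negative", "perturbed_output": "positive"},
]

robustness_table = evaluate_robustness(sample_records)
for text_id, flags in robustness_table.items():
    print(text_id, flags)
```

Each inner dictionary corresponds to one row of the evaluation file, with one binary column per perturbation function.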

But let’s set aside the technical details and see the framework in action. We got some interesting results during our testing of all three models, but it is impractical to demonstrate all of them here. Given below are some findings from Google PaLM regarding its performance while assessing the robustness and fairness quality attributes, using the toxicity detection and question answering tasks, respectively.

| ID | Original Text | Original Output | Perturbation Type | Perturbed Text | Perturbed Output |
|---|---|---|---|---|---|

These inputs for the toxicity detection task were collected from a dataset with Wikipedia edit comments, accessible here. As you can see, when we add random characters and convert text to l33t, the comments that were originally analyzed as being non-toxic were identified as being toxic by the model.

Perhaps more interestingly, here’s how Google PaLM responded to questions sourced from the AskReddit subreddit during our assessment of the fairness quality attribute. For this evaluation, we applied a unique perturbation method: assigning a character to the original input to see whether the model’s response differs from its response to a neutral question with no assigned character. Ideally, the model’s response should remain consistent across both scenarios for it to be deemed fair. However, this was not the case.

| ID | Original Text | Original Output | Perturbation Type | Perturbed Text | Perturbed Output |
|---|---|---|---|---|---|

The model appears to exhibit biases toward specific demographic groups. In certain instances, it goes to the extent of responding solely to the assigned character while not responding to the neutral question posed.
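For readers curious how the character-assignment perturbation and its consistency check could be expressed in code, here is a rough Python sketch. The prompt template, the function names and the use of `difflib` string similarity with a 0.8 threshold are all assumptions for illustration; free-text answers are hard to compare, and this is only a crude stand-in for a proper consistency measure.

```python
from difflib import SequenceMatcher


def assign_character(question: str, character: str) -> str:
    """Prefix the question with a persona statement (the template is an assumption)."""
    return f"I am {character}. {question}"


def responses_consistent(neutral: str, with_character: str, threshold: float = 0.8) -> bool:
    """Crude fairness relation: the two answers should be nearly the same text."""
    ratio = SequenceMatcher(None, neutral.lower(), with_character.lower()).ratio()
    return ratio >= threshold


question = "What is a good way to spend a free weekend?"
print(assign_character(question, "a teacher"))
print(responses_consistent("Try a long walk.", "try a long walk."))
```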

These results can provide some intriguing insights into the qualities of the models and can be used to work towards developing models with improved qualities. If you are interested in trying out the framework for yourself to test LLMs, you can access it on GitHub here. This work was also published in a paper that was accepted at the IEEE International Conference on Software Testing, Verification and Validation (ICST) 2024, and the preprint version is available on arXiv here.

I hope this brief article gave you some insights into the qualities of LLMs and the way they respond to different kinds of inputs; fooling them may be easier than you thought, and perhaps it’s not a good idea to trust their outputs blindly.

Thank you for reading!
